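The Sharp BeautifulSoup

BeautifulSoup is one of the more widely used libraries in Python web scraping. Its main job is to parse web pages and pull data out of them, and a complete scraping task can be written with fairly little code.

About BeautifulSoup

BeautifulSoup provides simple, Pythonic functions for navigating, searching, and modifying the parse tree. It is a toolbox: it parses a document and hands you the data you want to extract. Because it is simple, a complete application needs very little code.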
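As a minimal sketch of those three operations (searching, navigating, modifying), the snippet below parses a small inline HTML string instead of a live page. The markup and the variable names are illustrative assumptions; only the `design` class and the `_top` target are borrowed from the scraper later in this post.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded page.
html = """
<div class="design">
  <a target="_top" href="/python3/python3-tutorial.html"> Python 3 Tutorial </a>
  <a target="_top" href="python3-install.html">Install</a>
  <a target="_blank" href="https://example.com/">Elsewhere</a>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

# Searching: find one element, then all matching descendants.
menu = soup.find("div", class_="design")
links = menu.find_all("a", target="_top")
titles = [a.get_text().strip() for a in links]
hrefs = [a.get("href") for a in links]

# Navigating: step from a tag to its parent.
parent_name = links[0].parent.name

# Modifying: create a new tag and insert it at the front of the div.
heading = soup.new_tag("h1")
heading.string = "Contents"
menu.insert(0, heading)

print(titles)
print(hrefs)
print(parent_name, menu.find("h1").string)
```

The same `find`, `find_all`, `new_tag`, and `insert` calls are the ones the scraper relies on.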

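About pdfkit

pdfkit is a tool that converts HTML+CSS files into PDF documents. It is a wrapper around wkhtmltopdf, the HTML-to-PDF toolkit, so wkhtmltopdf must be installed. Its install path should be added to the system PATH, or passed to pdfkit explicitly through pdfkit.configuration as the code in this post does.

See also: Installing and using pdfkit and wkhtmltopdf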
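Before running the scraper it can help to check that wkhtmltopdf is actually reachable. The sketch below uses only the standard library; `find_wkhtmltopdf` is a hypothetical helper name, not something pdfkit provides.

```python
import shutil

def find_wkhtmltopdf(explicit_path=None):
    """Return a usable wkhtmltopdf path, or None if it cannot be located."""
    if explicit_path:
        # shutil.which also accepts a full path and checks that it is executable.
        return shutil.which(explicit_path)
    # Otherwise search the directories listed in PATH.
    return shutil.which("wkhtmltopdf")

path = find_wkhtmltopdf()
if path is None:
    print("wkhtmltopdf not found on PATH; install it or pass its full path")
else:
    print("wkhtmltopdf found at", path)
```

A path found this way can be handed to `pdfkit.configuration(wkhtmltopdf=...)`, which is how `save_pdf` later in this post points pdfkit at the executable.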

Key scraping code

Shared variables and settings:

    # Example: scraping the python3 tutorial at http://www.runoob.com/python3/python3-tutorial.html
    # The URL is split into the four settings below; usually only `language` needs changing.
    language = 'python3'
    list_tag = '_top'
    content_tag = 'content'
    path_wkthmltopdf = r'C:\Program Files\wkhtmltopdf\bin\wkhtmltopdf.exe'

    # The values below are derived and need no configuration.
    child_url = '/' + language
    url_tag = child_url + '/'
    output_name = u"runoob_" + language + r"教程.pdf"  # "教程" means "tutorial"
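To make the derivation concrete, here is a small sketch of what the dependent values look like for a given `language`. `derive_settings` is a hypothetical helper that is not part of the script, and `root_url` is copied from the full listing at the end of this post.

```python
root_url = "http://www.runoob.com"  # defined in the full script

def derive_settings(language):
    """Hypothetical helper: compute the values that follow from `language`."""
    child_url = "/" + language
    return {
        "index_url": root_url + child_url,     # page that holds the chapter list
        "url_tag": child_url + "/",            # marker for root-relative hrefs
        "output_name": "runoob_" + language + "教程.pdf",
    }

settings = derive_settings("python3")
print(settings["index_url"])
print(settings["url_tag"])
print(settings["output_name"])
```

Switching to another tutorial should then only require a different `language` value, assuming that section of the site uses the same page layout.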

The get_url_title_list method: collect the chapter URLs of the Python tutorial

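    def get_url_title_list():
        """
        Collect the title and URL of every chapter in the table of contents.
        :return: (titles, urls)
        """
        root = root_url
        temp_child_url = child_url
        resp = requests.get(root + temp_child_url)
        resp.encoding = 'utf-8'
        soup = BeautifulSoup(resp.text, "html.parser")
        # Keep only the links with target=list_tag inside <div class="design">.
        x = soup.find("div", class_="design")
        x = x.find_all("a", target=list_tag)
        title = []
        url_path = []
        for i in x:
            # get_text() also works when the link wraps nested tags, where .string is None.
            value = i.get_text().strip()
            title.append(value)
            temp_href = i.get('href').strip()
            if temp_href.find(url_tag) >= 0:
                # The href already contains "/<language>/", so it is root-relative.
                href = root + temp_href
            else:
                # Bare file name: prepend the section path.
                href = root + temp_child_url + '/' + temp_href
            url_path.append(href)
        return title, url_path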

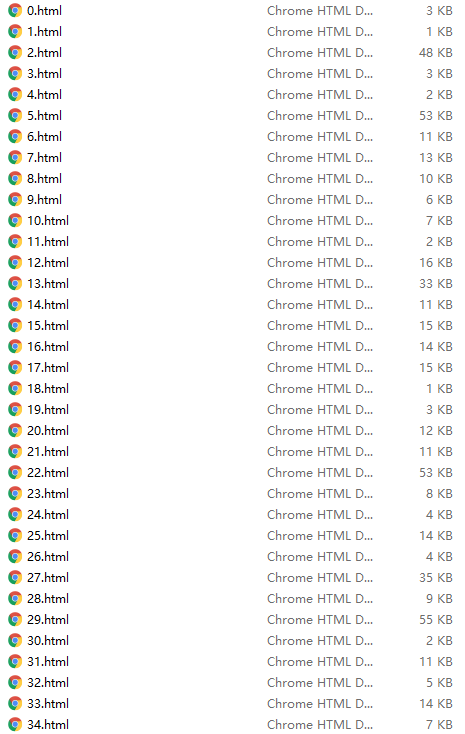

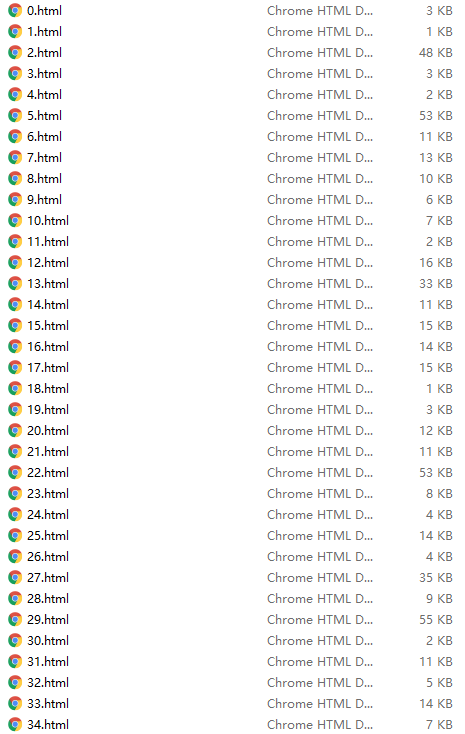
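The two-branch href handling in `get_url_title_list` can be tried in isolation. The sketch below mirrors that branch as a pure function and sets it next to `urllib.parse.urljoin`, which I include as a possible alternative and not as what the script uses. The sample hrefs are made up for illustration.

```python
from urllib.parse import urljoin

root_url = "http://www.runoob.com"
child_url = "/python3"
url_tag = child_url + "/"

def resolve_href(href):
    """Mirror the branch in get_url_title_list."""
    href = href.strip()
    if url_tag in href:
        return root_url + href                    # root-relative href
    return root_url + child_url + "/" + href      # bare file name

def resolve_href_urljoin(href):
    """Alternative: resolve against the section's base URL."""
    base = root_url + child_url + "/"
    return urljoin(base, href.strip())

for sample in ["/python3/python3-install.html", "python3-intro.html"]:
    print(resolve_href(sample), resolve_href_urljoin(sample))
```

For these two shapes of href both functions agree. `urljoin` additionally copes with absolute and protocol-relative links, which the manual branch does not.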

The parse_url_to_html method: save the scraped page as an HTML file

    def parse_url_to_html(url, name):
        """
        Download a page, extract its main content, and save it as an HTML file.
        :param url: URL to fetch
        :param name: file name for the saved HTML
        :return: name on success, None if parsing failed
        """
        try:
            response = requests.get(url)
            soup = BeautifulSoup(response.content, 'html.parser')
            # Main content
            body = soup.find(id=content_tag)
            # Title: use the <h1>, or promote the first <h2> if there is none
            title = body.find('h1')
            if title is None:
                title = body.find_all('h2')[0]
                title_tag = soup.new_tag('h1')
                title_tag.string = title.get_text()
                title = title_tag
            # Put the title at the very front of the content, centered
            center_tag = soup.new_tag("center")
            center_tag.insert(0, title)
            body.insert(0, center_tag)
            html = str(body)
            # Rewrite relative <img src> paths in the content to absolute URLs
            pattern = "(<img .*?src=\")(.*?)(\")"

            def func(m):
                src = m.group(2)  # group(2) is the src value
                if src.startswith("http"):
                    # Already absolute: leave the tag unchanged
                    return m.group(0)
                if src.startswith("//"):
                    # Protocol-relative, e.g. //www.runoob.com/...
                    rtn = m.group(1) + "http:" + src + m.group(3)
                else:
                    # Site-relative path: prepend the site root
                    rtn = m.group(1) + root_url + "/" + src.lstrip("/") + m.group(3)
                print(rtn)
                return rtn

            html = re.compile(pattern).sub(func, html)
            html = html_template.format(content=html)
            html = html.encode("utf-8")
            with open(name, 'wb') as f:
                f.write(html)
            return name
        except Exception as e:
            logging.error("Failed to parse %s", url, exc_info=True)

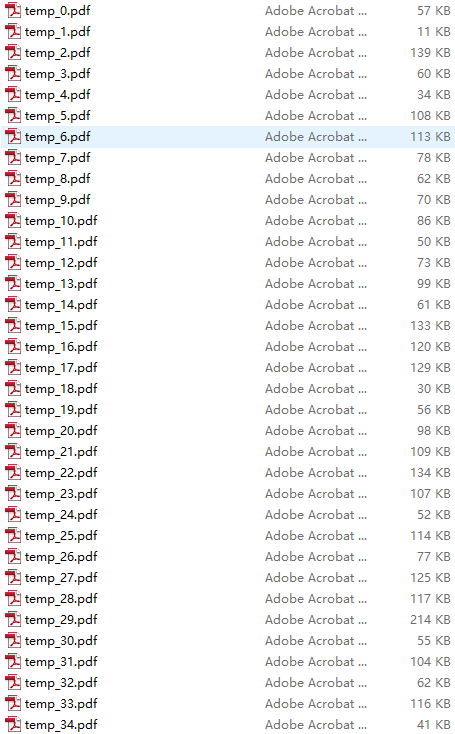
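The image-path rewriting is the fiddliest part of `parse_url_to_html`, so here is a standalone sketch of the idea that runs on a sample string. It swaps the hand-written branches for `urllib.parse.urljoin`, which is my substitution and not what the script does. `absolutize_img_src` and the sample markup are hypothetical.

```python
import re
from urllib.parse import urljoin

# Three groups: everything up to the opening quote, the src value, the closing quote.
IMG_SRC = re.compile(r'(<img [^>]*?src=")([^"]*)(")')

def absolutize_img_src(html, page_url):
    """Rewrite every <img src="..."> in html to an absolute URL."""
    def repl(m):
        # urljoin leaves absolute URLs alone and resolves the rest against page_url.
        return m.group(1) + urljoin(page_url, m.group(2)) + m.group(3)
    return IMG_SRC.sub(repl, html)

sample = (
    '<img src="/images/a.png">'
    '<img class="x" src="//www.runoob.com/b.png">'
    '<img src="https://example.com/c.png">'
)
result = absolutize_img_src(
    sample, "http://www.runoob.com/python3/python3-tutorial.html"
)
print(result)
```

wkhtmltopdf renders the saved HTML from a local file, so images with relative paths would not resolve unless they are rewritten like this first.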

The save_pdf method: convert the HTML file to a PDF file

    def save_pdf(htmls, file_name):
        """
        Convert one HTML file, or a list of HTML files, into a PDF file.
        :param htmls: HTML file name or list of file names
        :param file_name: PDF file name
        :return:
        """
        config = pdfkit.configuration(wkhtmltopdf=path_wkthmltopdf)
        options = {
            'page-size': 'Letter',
            'margin-top': '0.75in',
            'margin-right': '0.75in',
            'margin-bottom': '0.75in',
            'margin-left': '0.75in',
            'encoding': "UTF-8",
            'custom-header': [
                ('Accept-Encoding', 'gzip')
            ],
            # Placeholder cookie values
            'cookie': [
                ('cookie-name1', 'cookie-value1'),
                ('cookie-name2', 'cookie-value2'),
            ],
            'outline-depth': 10,
        }
        pdfkit.from_file(htmls, file_name, options=options, configuration=config)
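As far as I understand it, each entry in the `options` dict ends up as a wkhtmltopdf command-line flag. The helper below is a hypothetical illustration of that mapping written from scratch; it is not pdfkit's own code and pdfkit's exact normalization may differ.

```python
def options_to_args(options):
    """Hypothetical illustration: turn an options dict into CLI-style flags."""
    args = []
    for key, value in options.items():
        flag = "--" + key
        if isinstance(value, (list, tuple)):
            # Repeatable options: emit the flag once per (name, value) pair.
            for pair in value:
                args.extend([flag, *map(str, pair)])
        else:
            args.extend([flag, str(value)])
    return args

options = {
    "page-size": "Letter",
    "margin-top": "0.75in",
    "encoding": "UTF-8",
    "custom-header": [("Accept-Encoding", "gzip")],
    "outline-depth": 10,
}
print(options_to_args(options))
```

Reading the dict this way makes it easier to look up what each key does in the wkhtmltopdf documentation.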

The append_pdf method: concatenate the PDFs

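    def append_pdf(input1, output1, bookmark):
        # These are the legacy PyPDF2 (< 3.0) method names.
        # The new chapter starts at the current page count of the output.
        bookmark_num = output1.getNumPages()
        print(bookmark_num)
        for page_num in range(input1.numPages):
            output1.addPage(input1.getPage(page_num))
        # Bookmark the first page of the chapter that was just appended.
        output1.addBookmark(bookmark, bookmark_num)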
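The bookmark position in `append_pdf` is just a running page count: each chapter's bookmark points at the number of pages written before it. A small standard-library sketch of that arithmetic, with a hypothetical helper name and made-up page counts:

```python
def bookmark_start_pages(page_counts):
    """Return the 0-based page index at which each appended PDF starts."""
    starts = []
    total = 0
    for count in page_counts:
        starts.append(total)   # pages already in the output before this PDF
        total += count
    return starts

# Three chapters with 3, 1 and 4 pages.
counts = [3, 1, 4]
print(bookmark_start_pages(counts))
```

This is why `append_pdf` reads the page count before adding pages and not after.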

Once the methods above have run, the following code writes the merged PDF to disk:

    # Close the output file as soon as the merged PDF has been written.
    with open(output_name, "wb") as f:
        output.write(f)

Complete code

    # -*-coding:utf-8-*-
    import os
    import re
    import time
    import sys
    import logging
    import pdfkit
    import requests
    from bs4 import BeautifulSoup
    from PyPDF2 import PdfFileWriter, PdfFileReader  # legacy PyPDF2 (< 3.0) names

    html_template = """
    <!DOCTYPE html>
    <html lang="en">
    <head>
    <meta charset="UTF-8">
    </head>
    <body>
    {content}
    </body>
    </html>
    """

    root_url = "http://www.runoob.com"

    # Scraper example for Runoob.com
    # For example, to scrape the site's python3 tutorial, open python3; the default URL is
    # http://www.runoob.com/python3/python3-tutorial.html
    # The URL is split into the four settings below; usually only `language` needs changing.
    language = 'python3'  # section to scrape; change this for other tutorials
    list_tag = '_top'  # target attribute of the links in the left-hand list; rarely needs changing
    content_tag = 'content'  # id of the main content element; rarely needs changing
    path_wkthmltopdf = r'C:\Program Files\wkhtmltopdf\bin\wkhtmltopdf.exe'  # local wkhtmltopdf path; adjust for your machine

    # The values below are derived and need no configuration.
    child_url = '/' + language  # sub-URL of the section
    url_tag = child_url + '/'  # marks hrefs in the left-hand list that are already root-relative
    output_name = u"runoob_" + language + r"教程.pdf"  # output file name ("教程" means "tutorial")


    def parse_url_to_html(url, name):
        """
        Download a page, extract its main content, and save it as an HTML file.
        :param url: URL to fetch
        :param name: file name for the saved HTML
        :return: name on success, None if parsing failed
        """
        try:
            response = requests.get(url)
            soup = BeautifulSoup(response.content, 'html.parser')
            # Main content
            body = soup.find(id=content_tag)
            # Title: use the <h1>, or promote the first <h2> if there is none
            title = body.find('h1')
            if title is None:
                title = body.find_all('h2')[0]
                title_tag = soup.new_tag('h1')
                title_tag.string = title.get_text()
                title = title_tag
            # Put the title at the very front of the content, centered
            center_tag = soup.new_tag("center")
            center_tag.insert(0, title)
            body.insert(0, center_tag)
            html = str(body)
            # Rewrite relative <img src> paths in the content to absolute URLs
            pattern = "(<img .*?src=\")(.*?)(\")"

            def func(m):
                src = m.group(2)  # group(2) is the src value
                if src.startswith("http"):
                    # Already absolute: leave the tag unchanged
                    return m.group(0)
                if src.startswith("//"):
                    # Protocol-relative, e.g. //www.runoob.com/...
                    rtn = m.group(1) + "http:" + src + m.group(3)
                else:
                    # Site-relative path: prepend the site root
                    rtn = m.group(1) + root_url + "/" + src.lstrip("/") + m.group(3)
                print(rtn)
                return rtn

            html = re.compile(pattern).sub(func, html)
            html = html_template.format(content=html)
            html = html.encode("utf-8")
            with open(name, 'wb') as f:
                f.write(html)
            return name
        except Exception as e:
            logging.error("Failed to parse %s", url, exc_info=True)


    # Unused helper: removes the first attribute-less <tag>...</tag> span, content included.
    # To remove an <a> span instead of a <div>, pass "a".
    # remove_tag(html, "div")
    def remove_tag(text, tag):
        start = text.find("<" + tag + ">")
        end = text.find("</" + tag + ">")
        if start < 0 or end < 0:
            return text  # nothing to remove
        return text[:start] + text[end + len(tag) + 3:]


    def get_url_title_list():
        """
        Collect the title and URL of every chapter in the table of contents.
        :return: (titles, urls)
        """
        root = root_url
        temp_child_url = child_url
        resp = requests.get(root + temp_child_url)
        resp.encoding = 'utf-8'
        soup = BeautifulSoup(resp.text, "html.parser")
        # Keep only the links with target=list_tag inside <div class="design">.
        x = soup.find("div", class_="design")
        x = x.find_all("a", target=list_tag)
        title = []
        url_path = []
        for i in x:
            # get_text() also works when the link wraps nested tags, where .string is None.
            value = i.get_text().strip()
            title.append(value)
            temp_href = i.get('href').strip()
            if temp_href.find(url_tag) >= 0:
                # The href already contains "/<language>/", so it is root-relative.
                href = root + temp_href
            else:
                # Bare file name: prepend the section path.
                href = root + temp_child_url + '/' + temp_href
            url_path.append(href)
        return title, url_path


    def save_pdf(htmls, file_name):
        """
        Convert one HTML file, or a list of HTML files, into a PDF file.
        :param htmls: HTML file name or list of file names
        :param file_name: PDF file name
        :return:
        """
        config = pdfkit.configuration(wkhtmltopdf=path_wkthmltopdf)
        options = {
            'page-size': 'Letter',
            'margin-top': '0.75in',
            'margin-right': '0.75in',
            'margin-bottom': '0.75in',
            'margin-left': '0.75in',
            'encoding': "UTF-8",
            'custom-header': [
                ('Accept-Encoding', 'gzip')
            ],
            # Placeholder cookie values
            'cookie': [
                ('cookie-name1', 'cookie-value1'),
                ('cookie-name2', 'cookie-value2'),
            ],
            'outline-depth': 10,
        }
        pdfkit.from_file(htmls, file_name, options=options, configuration=config)


    def append_pdf(input1, output1, bookmark):
        # The new chapter starts at the current page count of the output.
        bookmark_num = output1.getNumPages()
        print(bookmark_num)
        for page_num in range(input1.numPages):
            output1.addPage(input1.getPage(page_num))
        # Bookmark the first page of the chapter that was just appended.
        output1.addBookmark(bookmark, bookmark_num)


    def main():
        output = PdfFileWriter()
        start = time.time()
        file_name = u"temp_"
        titles, urls = get_url_title_list()
        print(titles)
        print(urls)
        # Step 1: save every chapter as <index>.html
        for index, url in enumerate(urls):
            parse_url_to_html(url, str(index) + ".html")
        # Step 2: convert every HTML file to temp_<index>.pdf
        htmls = []
        pdfs = []
        for i in range(0, len(urls)):
            htmls.append(str(i) + '.html')
            pdfs.append(file_name + str(i) + '.pdf')
            save_pdf(str(i) + '.html', file_name + str(i) + '.pdf')
            print(u"Converted HTML file " + str(i))
        # Step 3: merge the PDFs, one bookmark per chapter
        i = 0
        for pdf in pdfs:
            # The handle has to stay open until output.write() runs; it is never closed here.
            fd = open(pdf, 'rb')
            append_pdf(PdfFileReader(fd), output, titles[i])
            i = i + 1
            print(u"Merged PDF " + str(i) + ": " + pdf)
        with open(output_name, "wb") as f:
            output.write(f)
        print(u"PDF written successfully!")
        # Step 4: delete the temporary files
        for html in htmls:
            os.remove(html)
            print(u"Deleted temporary file " + html)
        for pdf in pdfs:
            os.remove(pdf)
            print(u"Deleted temporary file " + pdf)
        total_time = time.time() - start
        print(u"Total time: %f seconds" % total_time)


    if __name__ == '__main__':
        try:
            main()
        except OSError as err:
            print("OS error: {0}".format(err))
        except ValueError as err:
            print("Value error: {0}".format(err))
        except Exception:
            # Only report an unexpected error when one actually occurred.
            print("Unexpected error:", sys.exc_info()[0])

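Known issues

When deleting the intermediate PDFs, the script reported an error (OS error: [WinError 32] The process cannot access the file because it is being used by another process: 'temp_0.pdf'). The file was reported as still in use, so deleting the PDF failed. I am still learning Python and several attempts to fix this did not work, so I will come back to it once my skills improve.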
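One likely cause, offered as a guess and not a confirmed diagnosis: `main()` opens every temporary PDF with `open(pdf, 'rb')` and never closes it, and Windows refuses to delete a file that is still open. The sketch below shows the general pattern of keeping all inputs open while they are needed, closing them, and only then deleting. It uses throwaway files in place of real PDFs, and `merge_then_delete` and the `consume` callback are hypothetical names.

```python
import os
import tempfile
from contextlib import ExitStack

def merge_then_delete(paths, consume):
    """Keep every input open while `consume` runs, then close and delete them."""
    with ExitStack() as stack:
        handles = [stack.enter_context(open(p, "rb")) for p in paths]
        # In the real script this step would wrap each handle in PdfFileReader,
        # call append_pdf, and write the merged output.
        result = consume(handles)
    # Every handle is closed here, so removal should no longer hit WinError 32.
    for p in paths:
        os.remove(p)
    return result

# Demonstration with small throwaway files.
tmp_dir = tempfile.mkdtemp()
paths = []
for i in range(3):
    p = os.path.join(tmp_dir, "temp_%d.pdf" % i)
    with open(p, "wb") as f:
        f.write(b"page %d" % i)
    paths.append(p)

merged = merge_then_delete(paths, lambda hs: b"|".join(h.read() for h in hs))
print(merged, [os.path.exists(p) for p in paths])
os.rmdir(tmp_dir)
```

The inputs have to stay open until the merged file is written because the reader appears to load page data lazily, so closing each file right after `append_pdf` would probably be too early.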

If anything is unclear, feel free to leave a comment or send me an email.
